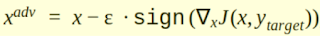

The growth in computing power and the easy availability of tools since 2012 have led to a rise in the development and use of Deep Learning models. While many practitioners focus only on building new models or improving accuracy, they are often unaware of the attacks that can degrade or break their models. Here we discuss the adversarial attack, a technique for making a Deep Learning model misclassify an input image. Subtle perturbations to the input image, too small to be noticed by a human observer, can fool the model into predicting incorrect outputs. A perturbation is noise added to a clean image to turn it into an adversarial example. The adversary performs either a White Box or a Black Box attack. The former assumes the adversary knows the architecture, inputs, and outputs of the model, while the latter limits the adversary to knowing only the inputs and outputs of the model. Considering the Targeted Fast Gradient Sign Method to show the attack: We have ta...
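To make the idea of a targeted perturbation concrete, here is a minimal sketch of a single targeted FGSM step. It is not the model or code used in this article. It assumes a toy linear softmax classifier with hand-picked weights `W` and `b`, a 4-pixel "image" `x` with values in [0, 1], and a hypothetical helper named `targeted_fgsm`. The update rule is the standard one: step against the sign of the gradient of the loss computed for the target class, `x_adv = x - eps * sign(grad)`.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D array of logits."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()


def targeted_fgsm(x, W, b, target, eps):
    """One targeted FGSM step against a toy linear softmax classifier.

    Assumption: the model is logits = W @ x + b, so the gradient of the
    cross-entropy loss w.r.t. the input has the closed form W.T @ (p - onehot).
    A real network would obtain this gradient via automatic differentiation.
    """
    p = softmax(W @ x + b)
    onehot = np.zeros_like(p)
    onehot[target] = 1.0
    grad = W.T @ (p - onehot)          # d(loss for target class) / d(x)
    x_adv = x - eps * np.sign(grad)    # move to DECREASE the target-class loss
    return np.clip(x_adv, 0.0, 1.0)    # keep pixel values in the valid range


# Hand-picked toy weights (3 classes, 4 pixels); purely illustrative.
W = np.array([
    [1.0, -1.0, 0.5, -0.5],
    [-1.0, 1.0, -0.5, 0.5],
    [0.5, 0.5, -0.5, -0.5],
])
b = np.zeros(3)
x = np.array([0.55, 0.45, 0.5, 0.5])   # "clean image"
target = 1                              # class the adversary wants
eps = 0.1                               # maximum change per pixel

x_adv = targeted_fgsm(x, W, b, target, eps)

print("clean prediction:      ", int(np.argmax(softmax(W @ x + b))))
print("adversarial prediction:", int(np.argmax(softmax(W @ x_adv + b))))
print("max pixel change:      ", float(np.max(np.abs(x_adv - x))))
```

In this toy setup the clean input is classified as class 0, and one step with `eps = 0.1` moves the prediction to the target class 1 while no pixel changes by more than `eps`. Because the step needs the gradient with respect to the input, this sketch corresponds to the White Box setting described above.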